Is P2P Traffic the Carriers' Red Herring?

Right now, the Canadian Radio-television and Telecommunications Commission (CRTC) is holding a public proceeding to examine the concept of network neutrality. Google defines network neutrality as:

“…the principle that Internet users should be in control of what content they view and what applications they use on the Internet. Fundamentally, net neutrality is about equal access to the Internet. In our [Google’s] view, the broadband carriers should not be permitted to use their market power to discriminate against competing applications or content.”

This is a crucially important discussion for Canada because its outcome influences the future of innovation in this country. Today, Canadian telecommunications carriers such as Rogers, Cogeco and Bell use deep-packet inspection (DPI) technology to examine the traffic that users generate. Where the carriers see traffic generated by peer-to-peer (P2P) clients such as BitTorrent, they use dedicated DPI hardware (such as Ellacoya, now Arbor Networks) to constrain the bandwidth available to those applications. This traffic management scheme runs in the network core; therefore it affects retail and wholesale clients alike. What does that mean? Well, it means that customers who might have tried to escape the ursine maw of Bell Canada by contracting with Primus for their internet access are still subject to Bell’s traffic management. Through Bell’s Gateway Access Service (GAS), Primus simply resells Bell’s DSL service to its own clients, who are then subject to anything Bell does on its core network.

My take on all of this is that the whole discussion of P2P is confounding. Carriers will point out that most of the P2P traffic today is generated by file swappers who are trading copyrighted files. By doing so, they commit an informal logical fallacy known as the red herring (alternatively, *ignoratio elenchi*, or ignorance of refutation). The carriers rely on the extensive media coverage garnered by P2P-related lawsuits to imply that all P2P traffic is the result of illegal file sharing. Further, they suggest that 5% of their users generate approximately 50% of their traffic using P2P applications. They work from those premises to justify traffic management for all P2P traffic, by all users.

Their dissembling aside, I believe there are, in fact, three more likely reasons for their decision:

- New revenue streams

- Capacity reservations

- Enormous oversubscription

New Revenue Streams

While Arbor advertises that one of the features of its DPI technology is to “manage a growing proliferation of bandwidth-intensive applications”, an equally compelling (and far less discriminatory) feature is to “control heavy usage by a minority of subscribers.” So, why would carriers choose to throttle all P2P traffic instead of just targeting the few users whom they repeatedly cite as the reason that traffic management is necessary? Because of another feature offered by Arbor’s product: “Develop competitive yet profitable pricing models based on usage quotas.” Does anyone want to bet that we see P2P add-ons to broadband packages in the near future?

Capacity Reservations

While more network capacity could be made available to internet traffic, internet access is largely a fixed-fee service, so the extra capacity would earn carriers nothing more. Instead, network capacity can be dedicated to “exciting” new offers like Rogers HD video on demand, or Bell’s Video Store. Surely a mere coincidence.

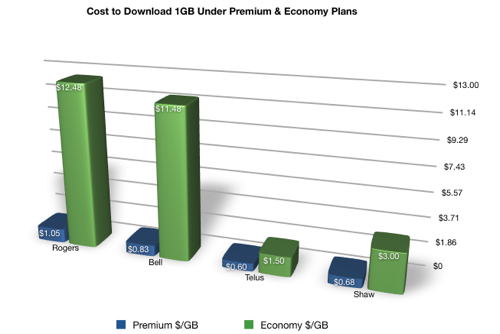

Enormous Oversubscription

By throttling P2P traffic, carriers can continue to advertise and sell services that they cannot credibly deliver. For instance, Rogers and Bell currently sell top-tier broadband plans for $99 and $83 a month respectively, excluding taxes and other fees. These services are advertised at 18Mbps with a 95GB quota (Rogers) and 16Mbps with a 100GB quota (Bell). However, if people use the full bandwidth advertised, they are flagged as heavy users, which the carriers use to justify the need for traffic management schemes such as the DPI-enabled throttling discussed earlier.
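As a rough check on those plans, the sketch below works out how long each quota lasts if a subscriber actually runs the line at its advertised speed. It assumes decimal units (1GB = 10^9 bytes, 1Mbps = 10^6 bits per second) and a perfectly sustained transfer; the function name is my own.

```python
def hours_to_exhaust_quota(quota_gb: float, speed_mbps: float) -> float:
    """Hours of continuous full-speed transfer needed to use the whole quota."""
    quota_bits = quota_gb * 1e9 * 8              # assumes 1 GB = 10^9 bytes
    seconds = quota_bits / (speed_mbps * 1e6)    # assumes 1 Mbps = 10^6 bits/s
    return seconds / 3600


# Advertised (quota in GB, speed in Mbps) for the two top-tier plans above.
plans = {"Rogers": (95, 18), "Bell": (100, 16)}

for carrier, (quota_gb, speed_mbps) in plans.items():
    hours = hours_to_exhaust_quota(quota_gb, speed_mbps)
    print(f"{carrier}: {hours:.1f} hours")
```

Under these assumptions the Rogers quota lasts about 11.7 hours and the Bell quota about 13.9 hours, out of a month of roughly 720 hours.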

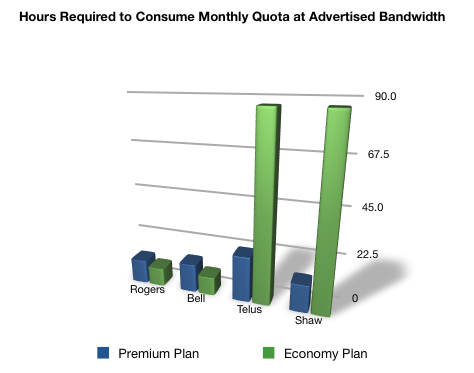

Look at this chart. If it’s possible to consume one’s entire monthly allocation of traffic in less than half a day, it seems a pretty clear indication that any problems in the core are created by too much capacity sold at the edge, and then managed through capping and, now, throttling. Are blazing speeds what people really want? If people want to watch a movie online, content really only needs to be delivered slightly faster than the player shows it. So, perhaps it’s advisable to download a 60-minute show in 50 minutes, which keeps enough content in the buffer that playback does not stutter if the connection drops for a moment or there is congestion on the network. 60-minute TV shows on iTunes are about 400MB. Downloading that in 50 minutes requires barely more than a 1Mbps connection. Why, then, sell a 25Mbps connection with a quota that can be consumed in a few hours? Why sell a product that places such strain on the access network that discriminatory throttling becomes the best way to deal with the issue? Something smells fishy.
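The streaming arithmetic is easy to verify. This small sketch computes the average rate needed to move a file of a given size in a given time, again assuming decimal units (1MB = 10^6 bytes) and ignoring protocol overhead; the function name is my own.

```python
def required_mbps(size_mb: float, minutes: float) -> float:
    """Average rate in Mbps needed to transfer size_mb megabytes in the given minutes."""
    bits = size_mb * 1e6 * 8          # assumes 1 MB = 10^6 bytes
    return bits / (minutes * 60) / 1e6


# A 400MB, 60-minute show delivered in 50 minutes.
rate = required_mbps(400, 50)
print(f"{rate:.2f} Mbps")
```

That comes to about 1.07Mbps, a small fraction of the speeds being advertised.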

Move Ahead

Carriers, there are other solutions! Some might even lead customers to like you (or at least not revile you).

- Accelerate upgrades to core infrastructure

- Implement protocol-agnostic throttling

- Stop the “arms race” with last-mile bandwidth when you can’t offer it

- Create and sell FlexWidth plans where you have something like 3Mbps during peak times and 20Mbps during non-peak times.
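To make the second and fourth suggestions concrete, here is a minimal sketch of a per-subscriber token bucket whose refill rate depends only on the time of day. It is an illustration, not a description of any carrier's equipment: the peak window, the class name and the function names are all hypothetical, and the 3Mbps and 20Mbps figures are simply the FlexWidth example above. The point is that the decision looks at packet size and the clock, never at the protocol or payload.

```python
from dataclasses import dataclass

PEAK_HOURS = range(17, 23)   # hypothetical peak window: 17:00 to 22:59
PEAK_MBPS = 3.0              # FlexWidth peak rate from the example above
OFF_PEAK_MBPS = 20.0         # FlexWidth off-peak rate from the example above


def allowed_mbps(hour: int) -> float:
    """Rate the subscriber may use at this hour, regardless of application."""
    return PEAK_MBPS if hour in PEAK_HOURS else OFF_PEAK_MBPS


@dataclass
class SubscriberBucket:
    burst_bits: float        # bucket capacity: the largest burst allowed
    tokens: float = 0.0      # bits the subscriber may send right now
    last_time: float = 0.0   # time of the last update, in seconds

    def allow(self, packet_bits: float, now: float, hour: int) -> bool:
        """Return True if the packet may pass; no protocol inspection involved."""
        rate_bps = allowed_mbps(hour) * 1e6
        elapsed = now - self.last_time
        # Refill tokens for the time that has passed, capped at the burst size.
        self.tokens = min(self.burst_bits, self.tokens + elapsed * rate_bps)
        self.last_time = now
        if packet_bits <= self.tokens:
            self.tokens -= packet_bits
            return True
        return False
```

A subscriber who bursts past the bucket is slowed down whether the traffic is BitTorrent, video or email, which is exactly what makes the scheme non-discriminatory.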

While mine are logical solutions, they humiliate carriers (who have to admit they can’t deliver what they’re selling), and they require a coordinated move by all carriers to downgrade advertised capacity. Unlikely, right? That’s why it falls upon the CRTC to guide them down the right path. Without network neutrality, innovation, competition and culture are all at risk:

- Developers have to waste time debugging applications that don’t work the same way on their local network as they do over the internet.

- Emerging collaboration tools from IBM and Microsoft that employ P2P technology will not work as expected.

- P2P-based data sharing and distributed computing used to discover new pharmaceuticals or find cures for diseases will never emerge, or will be developed elsewhere.

- Small producers will struggle to distribute films that large producers would not be able to sell to mainstream audiences.

- Using P2P becomes an inferior way to distribute software like Open Office, Linux, and virtual appliances, devastating competition in these markets, and impinging on the ability of businesses to employ this exciting technology.

- Emerging technologies that use cloud computing to create distributed composite applications will be built elsewhere.

There are so many ways that interfering with specific traffic (especially in undisclosed ways) is detrimental that it would take ages to discuss them all. The bottom line is that throttling is not the right approach to take when there are so many better, less invasive (and less expensive!) ways to accomplish similar goals. So, write, call or email your MPs and ask them to support network neutrality.